If you’re reading this article, I assume:

- you know what Django is

- you know what GitHub and GitHub Actions are

- you have a server that is running your Django application

- you wanna run your code tests on GitHub before deploying

- you know how the YAML file format works. Indentation is super critical in YAML, just like it is in Python.

If any of the above is unfamiliar, read up on it first. You won’t find that background in this article.

With the prerequisites outta the way, let’s get straight into the tutorial.

Test & Deploy GitHub Action

Here’s the whole GitHub Actions YAML file. Take a quick glance through it; the following sections walk through each part and how they come together into a complete working solution.

    name: Test and Deploy

    on: [push]

    jobs:
      build-test:
        name: build and test django app
        runs-on: ubuntu-latest
        services:
          postgres:
            image: postgres:12
            env:
              POSTGRES_USER: ${{ secrets.POSTGRES_USER }}
              POSTGRES_PASSWORD: ${{ secrets.POSTGRES_PASSWORD }}
              POSTGRES_DB: ${{ secrets.POSTGRES_DB }}
            ports:
              - 5432:5432
            # needed because the postgres container does not provide a healthcheck
            options: --health-cmd pg_isready --health-interval 10s --health-timeout 5s --health-retries 5
        steps:
          - uses: actions/checkout@v2
          - name: Set up Python 3.9
            uses: actions/setup-python@v2
            with:
              python-version: 3.9
          - name: Install dependencies
            run: |
              python -m pip install --upgrade pip
              pip install -r requirements.txt
          - name: Management commands
            run: |
              export DATABASE_URL='postgres://${{ secrets.POSTGRES_USER }}:${{ secrets.POSTGRES_PASSWORD }}@localhost:5432/${{ secrets.POSTGRES_DB }}'
              python manage.py migrate
          - name: Run tests
            run: |
              export DATABASE_URL='postgres://${{ secrets.POSTGRES_USER }}:${{ secrets.POSTGRES_PASSWORD }}@localhost:5432/${{ secrets.POSTGRES_DB }}'
              python manage.py test

      deploy:
        needs: build-test
        name: Deploy to server
        runs-on: ubuntu-latest
        steps:
          - name: executing remote ssh commands using ssh key
            uses: appleboy/ssh-action@master
            with:
              host: ${{ secrets.HOST }}
              username: ${{ secrets.USERNAME }}
              key: ${{ secrets.KEY }}
              port: ${{ secrets.PORT }}
              script: |
                /home/server/deploy/deploy_dev.sh

Warm Up

First of all, the intro part sets the ball rolling. The whole workflow has a name, and it runs whenever there’s a git push to the repository. You can set different conditions under which the workflow should run.
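To give a rough idea of what other trigger conditions can look like, the on key also accepts a mapping instead of a list. This is only a sketch, not part of the workflow in this article, and the branch name main is an assumption; use whatever your repository’s branches are called.

```yaml
# Sketch only: run on pushes to main and on pull requests targeting main.
# "main" is an assumed branch name, not something from the original workflow.
on:
  push:
    branches: [main]
  pull_request:
    branches: [main]
```

With a setup like this, pushes to other branches would not start the workflow at all.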

If, by any chance, you don’t want the workflow to run, you can always add [skip ci] to your commit message, and for that particular commit the workflow won’t run.

Then specify the jobs. The first job is called build-test and is given a name. Remember, if you wanna reference this job (which we’ll see in the following paragraphs), build-test is what you’ll use. You can think of it as the variable name for this particular job.

The runs-on key indicates which runner image to use. You might wanna pin an exact version of Ubuntu instead of latest, something like ubuntu-22.04.

    name: Test and Deploy

    on: [push]

    jobs:
      build-test:
        name: build and test django app
        runs-on: ubuntu-latest

Services

    services:
      postgres:
        image: postgres:12
        env:
          POSTGRES_USER: ${{ secrets.POSTGRES_USER }}
          POSTGRES_PASSWORD: ${{ secrets.POSTGRES_PASSWORD }}
          POSTGRES_DB: ${{ secrets.POSTGRES_DB }}
        ports:
          - 5432:5432
        options: --health-cmd pg_isready --health-interval 10s --health-timeout 5s --health-retries 5

Services are a beautiful thing in GitHub Actions. You can use service containers to connect databases, web services, memory caches, and other tools to your workflow.

The database use case is exactly what you want here. The image key lets you dictate the exact PostgreSQL version you want.

Then with env you set the credentials for connecting. As the service creates the database, it uses those credentials for the initial setup. So later on, when you connect to it, you use those same credentials.

In this case, thanks to GitHub Actions secrets, all the needed credentials are dropped in on the fly, so you don’t need to hardcode them.

The ports key maps port 5432 in the container to port 5432 on the runner, so your steps can reach the database at localhost:5432. Typical containerization stuff.

The options part is cool too. It makes the job wait until the PostgreSQL service is up and has passed the indicated health checks before your steps proceed.

Baby Steps

    steps:
      - uses: actions/checkout@v2
      - name: Set up Python 3.9
        uses: actions/setup-python@v2
        with:
          python-version: 3.9

With steps, you now get to express yourself by telling the build what steps to take.

In this section, actions/checkout@v2 clones the repository, and then actions/setup-python@v2 sets up a Python 3.9 environment.

    - name: Install dependencies
      run: |
        python -m pip install --upgrade pip
        pip install -r requirements.txt
    - name: Management commands
      run: |
        export DATABASE_URL='postgres://${{ secrets.POSTGRES_USER }}:${{ secrets.POSTGRES_PASSWORD }}@localhost:5432/${{ secrets.POSTGRES_DB }}'
        python manage.py migrate

Next, you install the dependencies: the typical pip setup process. The requirements.txt file is available because, remember, the repository has already been cloned onto the runner.

Under Management commands, you export the DATABASE_URL environment variable with all the secrets passed in. This export is important: when Django runs on the next line, python manage.py migrate, it’ll have access to DATABASE_URL.

The migration then happens in peace!

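    - name: Run tests
      run: |
        export DATABASE_URL='postgres://${{ secrets.POSTGRES_USER }}:${{ secrets.POSTGRES_PASSWORD }}@localhost:5432/${{ secrets.POSTGRES_DB }}'
        python manage.py test

Same as the previous step: export DATABASE_URL, and then run the tests. The export is repeated because each run step starts in a fresh shell, so a variable exported in one step doesn’t carry over to the next.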
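If repeating the export bothers you, one alternative (not what the workflow in this article does) is to declare the variable once at the job level with env, which makes it available to every step in that job. A minimal sketch reusing the same secrets, with the services block and the setup steps left out for brevity:

```yaml
jobs:
  build-test:
    runs-on: ubuntu-latest
    # Alternative sketch: set DATABASE_URL once for the whole job
    # instead of exporting it inside each run step.
    env:
      DATABASE_URL: "postgres://${{ secrets.POSTGRES_USER }}:${{ secrets.POSTGRES_PASSWORD }}@localhost:5432/${{ secrets.POSTGRES_DB }}"
    steps:
      # checkout, Python setup and dependency install go here, unchanged
      - name: Management commands
        run: python manage.py migrate
      - name: Run tests
        run: python manage.py test
```

A side benefit is that the secrets no longer get pasted into a single-quoted shell string, so a password containing a quote character can’t break the command.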

Now deploy…

deploy:

needs: build-test

name: Deploy to server

runs-on: ubuntu-latest

steps:

- name: executing remote ssh commands using ssh key

uses: appleboy/ssh-action@master

with:

host: ${{ secrets.HOST }}

username: ${{ secrets.USERNAME }}

key: ${{ secrets.KEY }}

port: ${{ secrets.PORT }}

script: |

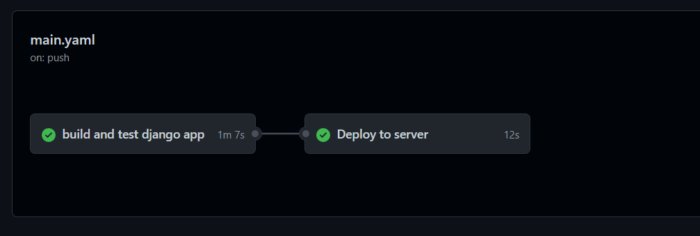

/home/server/deploy/deploy_dev.shOnto another job in the jobs. The first job was build-test, this job is deploy

But the needs: build-test is there to prevent this deploy job from running UNTIL build-test succeeds. If build-test fails, deploy won’t run.

Without needs, all the available jobs start as soon as the workflow begins and run in parallel with each other, which isn’t what you want.
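One thing to keep in mind: since the workflow triggers on every push, the deploy job runs for pushes to any branch once the tests pass. If that’s not what you want, one option is an if condition sitting next to needs. This is a sketch under the assumption that your deployable branch is called main; that name doesn’t come from the workflow in this article.

```yaml
jobs:
  deploy:
    needs: build-test
    # Assumption: only pushes to a branch named "main" should deploy.
    if: github.ref == 'refs/heads/main'
    name: Deploy to server
    runs-on: ubuntu-latest
    # steps: the same ssh step as in the deploy job shown earlier
```

With this in place, a push to any other branch still runs build-test, but deploy is skipped.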

Over here, you’re using the appleboy/ssh-action@master action. You pass in the related secrets necessary for connecting to your server.

Then, on the last line, after SSH’ing into the server, you run the deploy_dev.sh script, which in this case contains the steps to pull the latest code changes and restart, say, gunicorn.
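The article doesn’t show deploy_dev.sh itself. To give a rough idea of what “pull and restart” can look like, here’s a sketch that inlines equivalent commands in the script field instead of calling the file. The project path /home/server/app, the branch name main, and a systemd service called gunicorn are all hypothetical, and the restart line assumes the SSH user can run sudo without a password prompt; substitute whatever your server actually uses.

```yaml
- name: executing remote ssh commands using ssh key
  uses: appleboy/ssh-action@master
  with:
    host: ${{ secrets.HOST }}
    username: ${{ secrets.USERNAME }}
    key: ${{ secrets.KEY }}
    port: ${{ secrets.PORT }}
    # Hypothetical commands, in order:
    #   1. move into the (assumed) project directory
    #   2. pull the latest code from an (assumed) main branch
    #   3. restart an (assumed) systemd service named gunicorn
    script: |
      cd /home/server/app
      git pull origin main
      sudo systemctl restart gunicorn
```

Keeping the commands in a script on the server, as the workflow in this article does, has the advantage that you can change the deploy procedure without touching the workflow file.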

When the action runs and all checks out, you should see greeeeeens!

Conclusion

So that’s about it. Thanks for joining this one, and hope to see you in the next one.